Projects

This page presents various projects that are in progress or have been completed as part of RoboCup. Some of them are projects from the lecture "Image Understanding" and from the elective "Interactive mobile Robots".

Here you find the publications of Professor Dr. rer. nat. Matthias Rätsch.

Our latest open source programs can be downloaded here.

- QR-Code/OCR control and speech output

Reading QR codes and text via OCR.

Text-to-speech (TTS) software is used for all outputs, such as announcing that the goal has been reached, error messages, or the input read from a QR code or via OCR.

- 16SS:

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Implementing number detection for the floor number in the lift.

Here you find a short video.

Here you find a summary.

Here you find the documentation.

- Use of commercial Ivona TTS

Here you find a short video.

Here you find a summary.

Here you find the documentation.

- Localization based on QR code and OCR

Leonie reads QR-codes and interacts with the user.

Here you find a short video.

Here you find a summary.

- Use of open source software Mary TTS

Here you find a short video.

Here you find a summary.

- Using OpenCV for OCR

Here you find the documentation.

- Gesture detection

Using a 3D sensor to detect body gestures.

- Gesture detection for SCITOS

With the help of KineticSpace (©Professor Wölfel), gestures are learned, detected and recognized, and the SCITOS reacts to them.

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Theoretical study on the use of KineticSpace (©Professor Wölfel)

Here you find a summary.

Here you find the documentation.

- Waving detection with MSKinect

Detecting primitive gestures like waving to trigger SCITOS actions, using the MSKinect.

Here you find a summary.

Here you find the documentation.

- Gesture detection for SCITOS

With the help of KineticSpace (©Professor Wölfel), gestures are learned, detected and recognized, and the SCITOS reacts to them.

Here you find a summary.

Here you find a short video.

Here you find the documentation (for the password, please contact Felix Ostertag).

- Gesture detection

Using the Kinetic Space software, gestures are learned and can then be recognized.

Here you find a summary.

Here you find a short video.

Here you find the documentation (for the password, please contact Felix Ostertag).

- LOOK AT ME

Leonie recognizes people and looks at them.

In project 1, the microphone array of the MS Kinect is used to detect the direction a sound comes from. Leonie reacts to whistling, for example.

In project 2, the FaceVacs SDK from Cognitec is used to find faces.

Project 1:

Here you find a short video.

Here you find a summary.

Here you find the documentation.

Project 2:

Here you find a short video.

Here you find a summary.

Here you find the documentation.

Fundamentals:

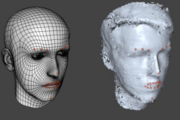

- FACE RECOGNITION

Leonie recognizes persons using the face recognition software FaceVacs SDK from Cognitec.

- Can I help you?

Trade Show Robot: Robot presents facial recognition and analysis at trade fairs

Using the Cognitec SDK, the basis for a trade show robot was created. In addition to a guide for installing and using the SDK, an example dialogue and a first example program were developed.

Here you will find the resulting package.

Here you will find the short summary.

- EmotionDetection

Detection of human emotions and facial expressions.

- 16WS: Emotion detection with SVM

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- 16SS: Emotion detection with CNN

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Evaluation of Kinect 2 for emotion detection compared to Kinect 1

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Emotion detection with a neural network

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Emotion detection based on MSKinect and faceshift SDK

Emotion analysis using a Microsoft Kinect sensor and a C++ application that receives data from faceshift's network stream.

With faceshift, 48 action units of the face can be tracked and measured, instead of the 6 available with the Kinect SDK.

Here you find a summary.

Here and here you find a short video.

- Emotion detection with MSKinect

Here you find the documentation.

Here you find a summary.

- Active Closer Inspection System

(COG third-party funded project)

Using an active camera system, persons are detected and then tracked with tracking algorithms.

- 16SS: Consolidating and merging the existing work, plus a demo zoom-cam player

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Dual-Cam-System-Tracking

A person is tracked with a wide-angle camera, while the PTU (pan-tilt unit) is used to focus a high-resolution camera on the person.

Here you find a summary.

Here you find a short video.
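As a rough illustration of the pointing step in such a dual-camera setup, the sketch below converts the pixel position of a tracked person in the wide-angle image into pan and tilt angles for the PTU. It is not the project's implementation. It assumes an ideal pinhole camera without lens distortion and that both cameras share the same optical centre. The function name and the example image size and fields of view are hypothetical.

```python
import math


def pixel_to_pan_tilt(u, v, width, height, hfov_deg, vfov_deg):
    """Map a pixel (u, v) in the wide-angle image to PTU angles in degrees.

    Assumptions (not taken from the project): ideal pinhole model, no lens
    distortion, wide-angle camera and PTU camera share one optical centre.
    """
    # Focal lengths in pixels, derived from the fields of view.
    fx = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    fy = (height / 2.0) / math.tan(math.radians(vfov_deg) / 2.0)

    # Offset of the target from the image centre; image v grows downwards,
    # so the sign is flipped to make "up" a positive tilt.
    dx = u - width / 2.0
    dy = height / 2.0 - v

    pan = math.degrees(math.atan(dx / fx))
    tilt = math.degrees(math.atan(dy / fy))
    return pan, tilt
```

For example, `pixel_to_pan_tilt(480, 200, 640, 480, 90.0, 60.0)` yields the angles for a person detected right of and slightly above the image centre. A real system would additionally need a calibration between the two cameras.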

- Follow me

SCITOS follows a person, including turning and driving backwards, while maintaining a constant distance.
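One simple way to hold a constant distance is a proportional controller. This is a minimal sketch, not the SCITOS implementation. It assumes a person tracker that reports the distance and bearing of the person. The function name, gains, target distance and velocity limits are hypothetical values chosen for illustration.

```python
def follow_command(distance_m, bearing_rad,
                   target_m=1.2, k_lin=0.8, k_ang=1.5,
                   max_lin=0.6, max_ang=1.0):
    """Return (linear, angular) velocity for following a person.

    distance_m:  measured distance to the person in metres
    bearing_rad: angle to the person relative to the robot heading
    All default parameters are illustrative assumptions.
    """
    def clamp(value, limit):
        return max(-limit, min(limit, value))

    # Positive error: person too far away, drive forwards.
    # Negative error: person too close, drive backwards.
    linear = clamp(k_lin * (distance_m - target_m), max_lin)

    # Turn towards the person in proportion to the bearing.
    angular = clamp(k_ang * bearing_rad, max_ang)
    return linear, angular
```

With these defaults, the robot stands still when the person is exactly 1.2 m straight ahead, backs up when the person comes closer, and drives forwards at a limited speed when the person moves away.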

- New Hardware

Various projects concerning possible or actual hardware for SCITOS.

16WS:

Ricoh 360° + HTC Vive

Here you find a summary.

Here you find a short video.

Here you find the documentation from.

16SS:

SphereCam

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Multi-level navigation and elevator control

Navigating over multiple levels with the SCITOS, including using the elevator.

- Contact

Prof. Dr. rer. nat. Matthias Rätsch

Building 4

Room 4-110

Tel. +49 7121 271-4046

Send e-mail
