Projects - Prof. Rätsch

The following thesis and project topics are suggestions for work within extracurricular activities (2 ECTS), lab projects (3 or 6 ECTS), the project master (12 ECTS), bachelor's or master's theses (internal or with industry partners), and doctoral research.

If you are interested in a particular topic or would like to go through the list of current topics, talk to me in person or send me an e-mail to arrange an appointment.

Example thesis topics:

- Cognitec System GmbH (world leader in face analysis)

- “Monocular SLAM methods for human-machine interaction”

- “Combining structure-from-motion and shape-from-shading methods for face analysis”

- “Active Closer Inspection System”

- Robert Bosch GmbH

- “SLAM methods for navigation and localization in service robotics”

- “Omnidirectional camera systems for autonomous robots”

- “Automatic production assistant APAS for human-robot collaboration”

- Bosch eBike Systems GmbH

- “Vision system for increasing the safety of eBikes”

- “Machine learning for an eBike assistant”

- Daimler AG

- “Human-robot collaboration in the automotive industry”

- “Convolutional neural networks for driver assistance systems”

- Manz AG

- “Development of a self-test for an innovative laser scanner optics with a coaxial camera” (PDF)

- “Optimization of object detection in flow production with a delta robot”

We also offer a number of internal bachelor's and master's theses (see the sections below). For a current list of all topics and more details on the proposals, please arrange an appointment by e-mail.

Completed projects

- QR-Code/OCR control and speech output

Reading QR codes and text via OCR.

Using text-to-speech (TTS) software for all outputs, such as reaching the goal, error messages, and the input read from QR code or OCR.

- Number detection for the floor number in the lift

Here you find a short video.

Here you find a summary.

Here you find the documentation.

- Use of commercial Ivona TTS

Here you find a short video.

Here you find a summary.

Here you find the documentation.

- Localization based on QR code and OCR

Leonie reads QR-codes and interacts with the user.

Here you find a short video.

Here you find a summary.

- Use of open source software Mary TTS

Here you find a short video.

Here you find a summary.

- Using OpenCV for OCR

Here you find the documentation.

- Gesture detection

Using a 3D sensor to detect body gestures.

- Gesture detection for SCITOS

With the help of KineticSpace (© Professor Wölfel), gestures are learned, detected and recognized, and the SCITOS reacts to them.

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Theoretical study on the use of KineticSpace (© Professor Wölfel)

Here you find a summary.

Here you find the documentation.

- Waving detection with MSKinect

Detecting primitive gestures like waving to trigger SCITOS actions, using the MSKinect.

Here you find a summary.

Here you find the documentation.

- Gesture detection for SCITOS

With the help of KineticSpace (© Professor Wölfel), gestures are learned, detected and recognized, and the SCITOS reacts to them.

Here you find a summary.

Here you find a short video.

Here you find the documentation (for the password, please contact Felix Ostertag).

- Gesture detection

Using the Kinetic Space software, gestures are learned and can be recognized afterwards.

Here you find a summary.

Here you find a short video.

Here you find the documentation (for the password, please contact Felix Ostertag).

- LOOK AT ME

Leonie recognizes people and looks at them.

In project 1 the microphone array of the MS Kinect is used to detect where a sound comes from. Leonie reacts to whistling, for example.

In project 2 the FaceVacs SDK from Cognitec is used to find the faces.

Project 1:

Here you find a short video.

Here you find a summary.

Here you find the documentation.

Project 2:

Here you find a short video.

Here you find a summary.

Here you find the documentation.

Basics:

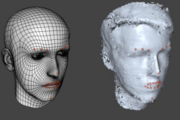

- FACE RECOGNITION

Leonie recognizes persons using the face recognition software FaceVacs SDK from Cognitec.

- Can I help you?

Trade Show Robot: a robot presents face recognition and face analysis at trade shows

Using the Cognitec SDK, the foundation for a trade show robot was created. In addition to a guide to installing and using the SDK, the project produced an example dialogue flow and a first example program.

Here you find the resulting package.

Here you find a short summary.

- EmotionDetection

Detection of human emotions and facial expressions.

- Evaluation of Kinect 2 for emotion detection compared to Kinect 1

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Emotion detection with a neural network

Here you find a summary.

Here you find a short video.

Here you find the documentation.

- Emotion detection based on MSKinect and faceshift SDK

Emotion analysis using a Microsoft Kinect sensor and a C++ application that receives data from faceshift's network stream.

With faceshift, 48 action units of the face can be tracked and measured, instead of the 6 available with the Kinect SDK.

Here you find a summary.

Here and here you find short videos.

- Emotion detection with MSKinect

Here you find the documentation.

Here you find a summary.

- Active Closer Inspection System

(COG third-party funded project)

Using an active camera system, persons are detected and then tracked with tracking algorithms.

- Dual-Cam-System-Tracking

A person is tracked in the image of a wide-angle camera, while the PTU (pan-tilt unit) is used to focus a high-res camera on the person.

Here you find a summary.

Here you find a short video.

- Follow me

SCITOS follows a person, including turning and driving backwards, while maintaining a constant distance.

- Multi-level navigation and elevator control

Navigating over multiple levels with the SCITOS, including using the elevator.
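As an illustration of the "Follow me" behaviour listed above (keeping a constant distance to a person, including turning and driving backwards), the following is a minimal sketch of one proportional control step. It is not the project's implementation: the function `follow_step`, the `FollowCommand` type, the target distance of 1.2 m, and all gains and speed limits are assumptions chosen for this example, and the person's distance and bearing are assumed to come from some tracker.

```python
from dataclasses import dataclass


@dataclass
class FollowCommand:
    linear: float   # m/s, positive drives forward, negative drives backwards
    angular: float  # rad/s, positive turns towards positive bearing


def clamp(value: float, limit: float) -> float:
    """Restrict value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def follow_step(distance_m: float, bearing_rad: float,
                target_m: float = 1.2,    # assumed desired distance
                k_lin: float = 0.8,       # assumed proportional gains
                k_ang: float = 1.5,
                max_lin: float = 0.6,     # assumed speed limits
                max_ang: float = 1.0) -> FollowCommand:
    """Compute one velocity command from the tracked person's position.

    distance_m:  measured distance to the person in metres
    bearing_rad: angle of the person relative to the robot's heading
    """
    # Too far away gives a positive speed, too close a negative one,
    # so the robot backs up when the person approaches.
    linear = clamp(k_lin * (distance_m - target_m), max_lin)
    # Turn towards the person so they stay centred in front of the robot.
    angular = clamp(k_ang * bearing_rad, max_ang)
    return FollowCommand(linear, angular)


if __name__ == "__main__":
    print(follow_step(2.2, 0.0))   # person far ahead: drive forward
    print(follow_step(0.7, 0.0))   # person too close: drive backwards
    print(follow_step(1.2, 0.2))   # correct distance, person off-centre: turn
```

A purely proportional law like this is only a starting point; a real platform would additionally need obstacle avoidance and handling for a lost track.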

Contact

Prof. Dr. rer. nat. Matthias Rätsch

Office hours:

Tuesdays, 7:00 p.m. online and by appointment

Building 4, Room 4-110

Tel. +49 7121 271-4046

Alteburgstr. 150

72762 Reutlingen

Fax +49 7121 271-7004
